Pioneering research uses Big Data to demonstrate a systematic relationship between language and gesture that makes the message more efficient.

More than 50% of the times that a temporal expression is used, it is accompanied by a gesture related to its meaning, according to work led by the University of Murcia and carried out together with the University of Navarra and the University of Tübingen.

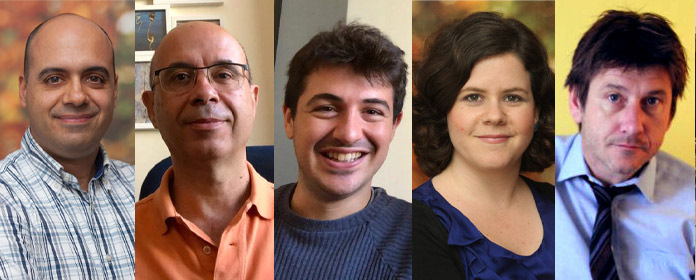


A study has demonstrated for the first time, using Big Data, that there is a systematic relationship between the use of temporal expressions and their associated gestures, and that this relationship makes the message more efficient. This is the main conclusion of work led by the University of Murcia and carried out in partnership with experts from the Institute for Culture and Society (ICS) of the University of Navarra and the University of Tübingen (Germany).

Specifically, on 69% of the occasions when expressions referring to time are used (such as 'before', 'in the near future' or 'later'), these phrases are accompanied by a gesture. In more than 50% of cases, this gesture is closely related to the message: for example, marking an imaginary timeline with the hands when saying 'from the beginning to the end'. These are some of the data reported in the article, recently published in the high-impact journal PLoS ONE.

This study offers a way to understand the mechanisms of human communication from a multimodal perspective. In a normal conversation between several people, speech comes into play with its nuances of intonation, rhythm and regional accent. In a face-to-face conversation, however, gaze, gestures and even clothing also come into play.

By studying how all these elements relate to language, in this case to temporal expressions, it is possible to extract cognitive patterns and learn how a communicative system that tends toward efficiency is acquired, which is fundamental to understanding the human mind.

Improved IT systems such as virtual assistants

According to the research team, these results will make it possible to extrapolate the mechanisms of human communication in order to develop and improve computer systems that, like the virtual assistants Alexa or Siri, can hold a conversation with humans and understand gestures or certain expressions.

"Right now it is one of the great challenges of artificial intelligence: teaching a program to understand what a person says and does, to notice their intentions and give them a natural response," explains lead author Cristóbal Pagán, researcher at the University of Murcia and former member of the ICS. He stresses that understanding the mechanisms of human communication is the first step in developing efficient interfaces that facilitate the relationship between humans and machines.

For the research, videos of speakers saying phrases of this kind were selected from thousands of hours of current affairs programs (debates, news programs and interviews) broadcast by the main US networks. This wide sample of communicative contexts makes it possible to learn what people think when they communicate, that is, whether they unconsciously feel the need to provide more information.

Although all the data are taken from US television programs, the gist of the results can be extrapolated to other countries, Pagán says. The rate of gestures accompanying temporal expressions is expected to vary in other languages, but it should remain high and should increase for less frequent phrases; that is, it will be guided by communicative efficiency.

Along with Cristóbal Pagán, the research team includes Javier Valenzuela and Daniel Alcaraz Carrión, from the University of Murcia; Inés Olza, from the ICS of the University of Navarra; and Michael Ramscar, from the University of Tübingen. They are part of the global laboratory network Red Hen Lab, which brings together experts from around the world in language, computer science and artificial intelligence. The Red Hen Lab network builds large digital resources for research with Big Data.